We ran a GenAI experiment at iF. Here are some of our takeaways.

Since the release of ChatGPT in November 2022, the media has been buzzing with conversations and commentary on the potential of Generative AI (GenAI). Almost everyone has had something to say about its promise and pitfalls, from legacy consultancies to small boutiques, as well as a new class of “prompt engineering” thought leaders and GenAI entrepreneurs.

At iF, we have been asking similar questions: How might this technology affect our workflows and client projects? Where is the real potential for disruption in the clients and industries we support? And how might GenAI change the way we create value and impact for our clients?

In our quest to answer these questions, we’ve sought to cut through the noise to understand how GenAI might add value to our work today and where there are challenges or concerns in leveraging this technology.

The Experiment

In that spirit, we set out to explore the use of GenAI in our work through a month-long, company-wide experiment. We chose two main tools, ChatGPT and DALL-E, for iFsters to integrate into their everyday workflows, though iFsters were also encouraged to experiment with other tools.

Our process for developing and running this experiment included:

- Creating a benchmarking survey to understand iFsters’ current awareness of and comfort with GenAI tools

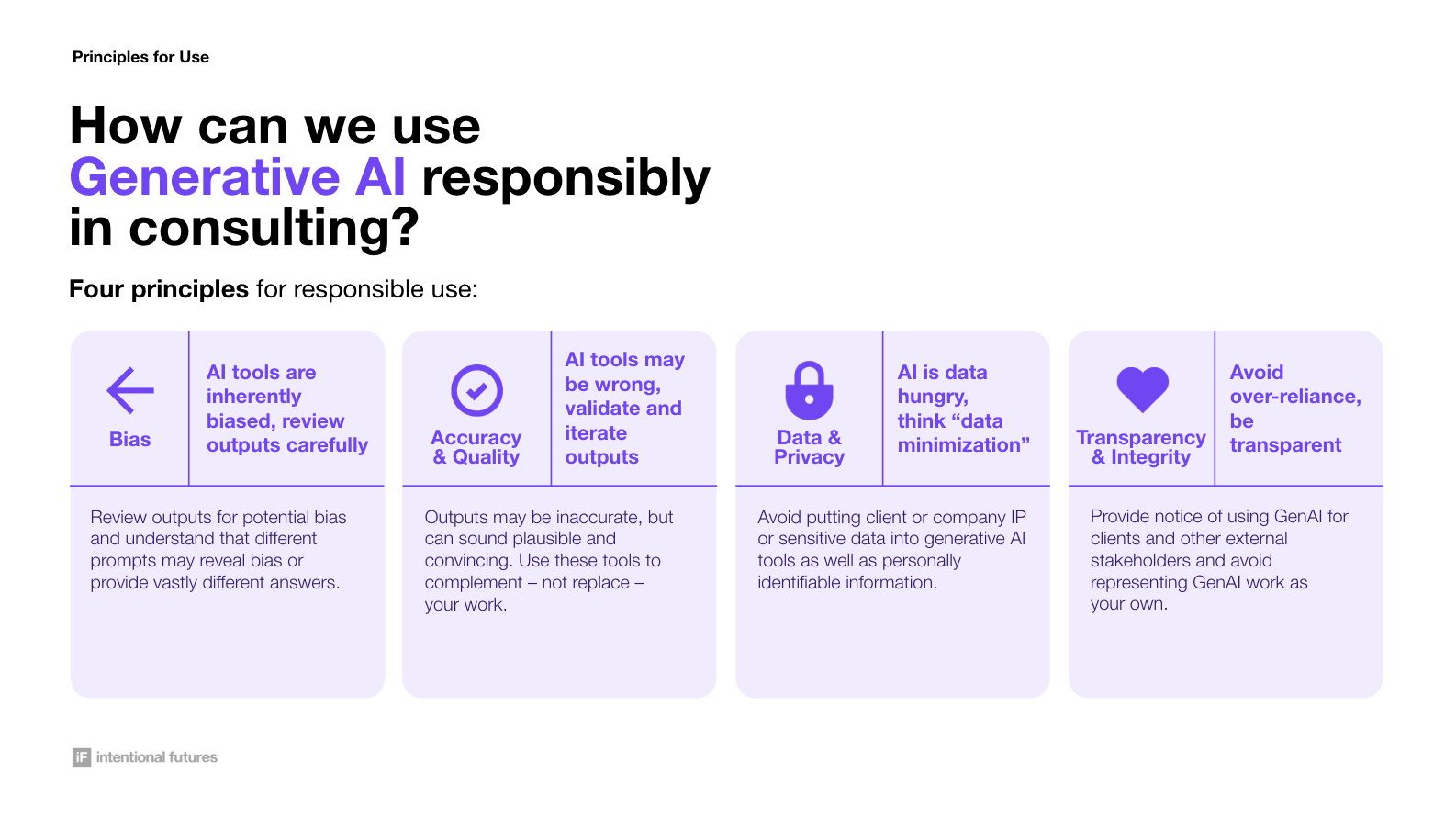

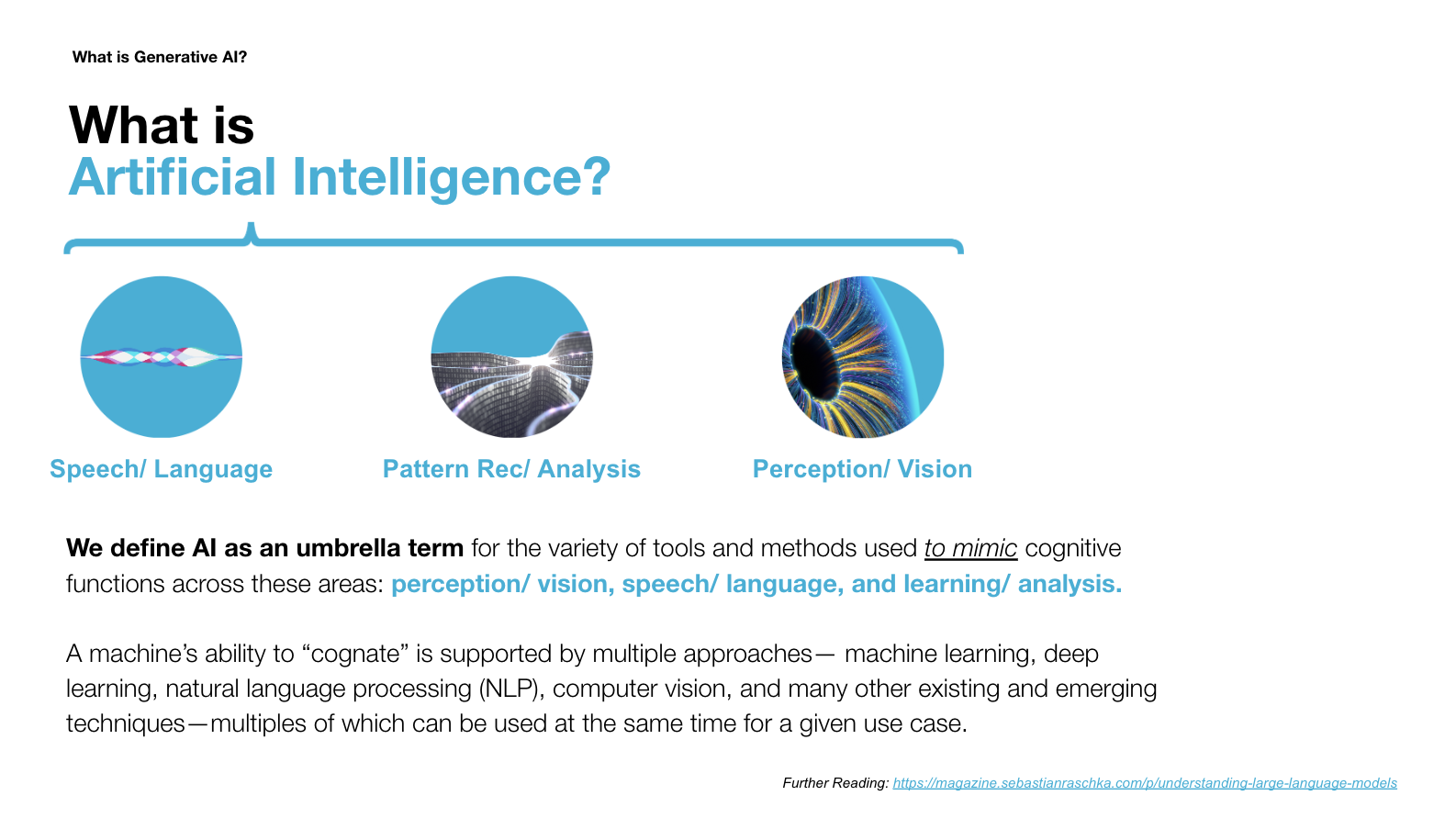

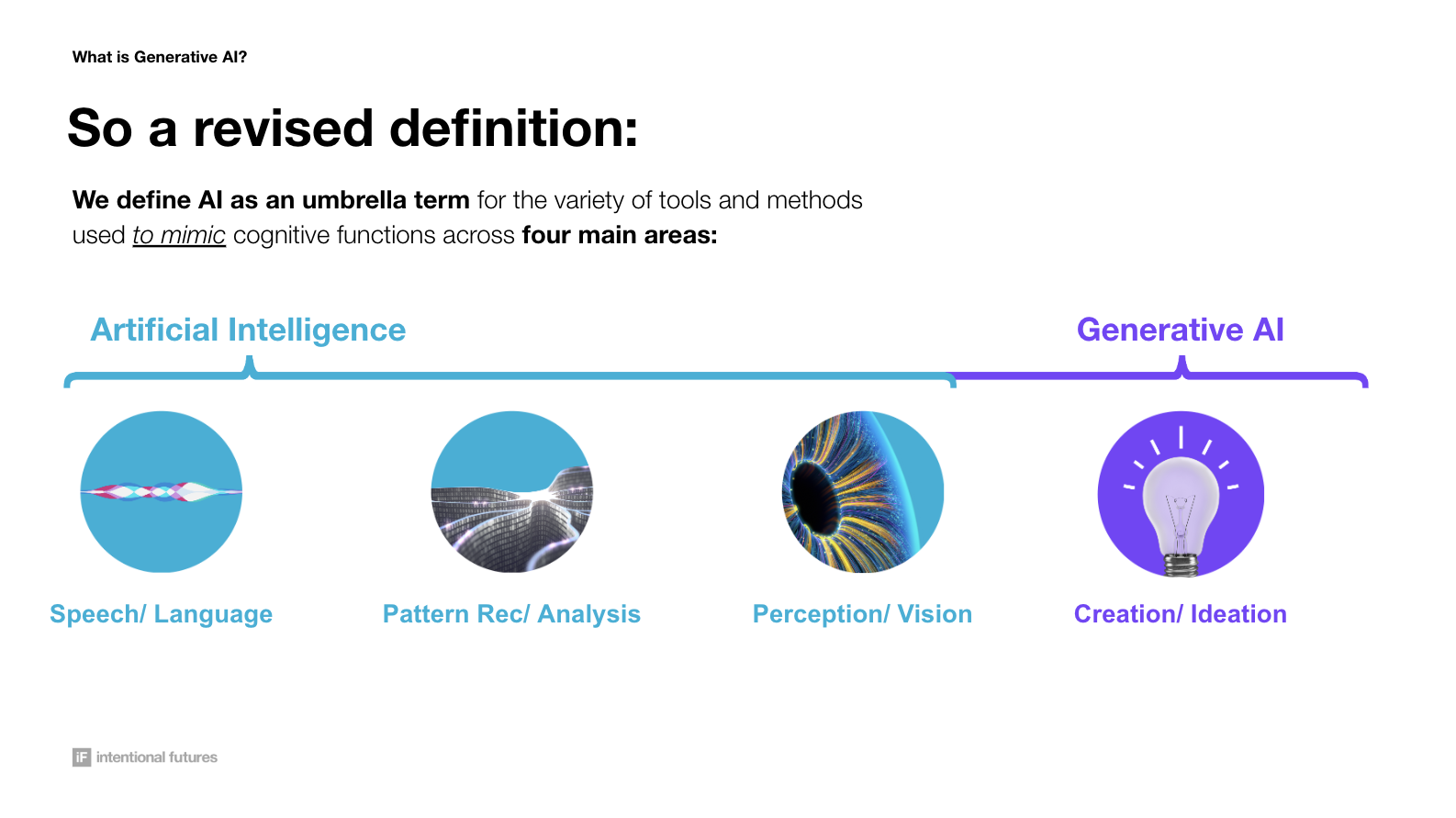

- Facilitating a one-hour crash course on GenAI to get iFsters up to speed on what it is (and is not) and how to use it responsibly in our work

- Identifying, based on iFster input, a set of use cases on which to focus the experiment

- Capturing and incorporating learnings along the way through a weekly survey

We still have a lot to learn and more to experiment with, but below we offer some initial lessons and takeaways.

Lessons and Learnings

Generative AI is best used to augment, not replace, our work. iFsters found GenAI, especially tools like ChatGPT and Bard, helpful for summarizing, drafting, or generating ideas on topics they were already familiar with. Some iFsters reported significant time savings in this area, with tools like ChatGPT helping them accomplish weeks' worth of research in only a few days.

Trust is a barrier to broader applicability. iFsters quickly ran into roadblocks when trying to use these tools for work that required higher levels of accuracy. They reported instances of “AI hallucination” (confident-sounding but fabricated output), widespread inaccuracies, and inconsistent, unoriginal outputs. These problems limited the tools' usefulness for many tasks and ultimately led to frustration and hesitation about using them.

Human oversight is still important. iFsters were at times surprised, and disappointed, by the level of oversight needed to use these tools responsibly and productively. Specifically, iFsters found that drafting and iterating on prompts, then reviewing and revising outputs for accuracy, timeliness, and client-, market-, or industry-specific context, could take longer than doing the work themselves.

Summary

Ultimately, for GenAI to gain wider adoption in, and applicability to, our own work, three important issues need to be addressed:

- Accuracy and reliability of outputs

- Up-to-date and context-aware search capabilities

- Seamless integration into everyday tools and processes

What’s Next for iF and GenAI

Our experiment revealed wide variation in how much iFsters trusted GenAI tools and how helpful they found them in their work. We hypothesize that these differences stem in part from individual comfort with, expectations of, and willingness to experiment with these tools. While we provided initial training on what GenAI is and how we can use it in our work, this first phase relied largely on self-guided exploration and trial and error.

Moving forward, we want to dig into these differences and uncover best practices that can be shared across our organization. We hope to establish processes that help standardize the use of these tools, so that we can take advantage of their potential while mitigating risks to the quality, integrity, and accuracy of our work. We anticipate that this will involve additional training, forums for further exploration, and a more intentional approach to capturing and communicating best practices.

As we continue to explore the use of GenAI, we are also curious to learn from other organizations' and individuals' experiences:

- What use cases, if any, have you found GenAI suitable for?

- How are you balancing potential new efficiencies against quality, accuracy, and transparency?

- What threats or risks are you paying most attention to, and how are you proactively addressing them?